“Surely if our localized UX is acting up, we’ll hear about it soon enough?”

Not so fast!

User feedback is great, but waiting until enough people complain about localization issues isn’t a winning strategy. Do you really want to hear about problems days, weeks, or months after launch? Just think how many users will drop off before it comes to light.

The truth is, most people won’t bother to complain. They’ll just drop off without a word.

As Joe Speck, Global Web Platforms Manager at Wall Street English, puts it, “We can’t just rely on site visitors to flag problems. Consistency across 35 sites is already hard enough to manage. If a localization error slips through, we might not see it until much later.”

First impressions last, so it’s unlikely you’ll get a second chance to impress. As research shows, 88% of users say they won’t return after a bad experience.

With that in mind, if you’re launching in new markets or updating your website or app, can you really afford to neglect localization testing?

👎 Localization errors are a churn trigger

We’re imperfect humans living in an imperfect world. Until that changes (which it won’t), most new UX releases will have at least a few localization issues that don’t get picked up during the development phase.

That’s why it pays to test, baby, test! Don’t mistakenly think that fancy new automation tools will pick up all the bloopers.

As useful as AI is, it can’t catch all localization bugs. Robots don’t understand cultural nuances like we do. You need a real human translator to cast an eye over it if you want perfection. As well as missing cultural nuance, automation tools don’t pick up on other common localization problems, such as…

Clunky translations that sound unnatural

A funny, but slightly cringe, example is when KFC's slogan “Finger-lickin’ good” was translated in China as the darkly sinister: “Eat your fingers off.” Coors messed up too, when their slogan “Turn it loose” was translated into Spanish as “Suffer from diarrhea.” Not a great advert for their beer! 😬

Badly translated idioms

The English idiom “it’s raining cats and dogs” is meaningless if translated literally into Chinese. Readers would probably wonder why cats and dogs are falling from the sky. In instances like these, idioms can lose meaning (or even change it) across locales.

Cultural slip-ups

This encompasses words, colors, symbols, or images that confuse or offend. Nike faced backlash in 2019 after the sole design on its Air Max 270 resembled the Arabic word for “Allah.” In Arab culture, showing or stepping on the sole is highly offensive, so this sparked outrage and led to a petition with over 48,000 signatures. The brand apologized and clarified it was unintentional, but the damage was done.

Formatting mix-ups

Dates, times, currencies, or numbers can also lead to UX confusion. For example, 05/09 is May 9 in the US, but 5th Sept in the UK.
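To make the date mix-up concrete, here is a minimal Python sketch that parses the same string under both conventions. The year 2025 is an assumption added so the string parses as a full date.

```python
from datetime import datetime

raw = "05/09/2025"  # the year is an assumption for illustration

# Same string, two conventions: month-first (US) vs day-first (UK).
us = datetime.strptime(raw, "%m/%d/%Y")
uk = datetime.strptime(raw, "%d/%m/%Y")

print(us.month, us.day)  # 5 9  (May 9)
print(uk.month, uk.day)  # 9 5  (September 5)

# An unambiguous format such as ISO 8601 removes the guesswork
# when dates are stored or passed between systems.
print(us.date().isoformat())  # 2025-05-09
```

Neither parse raises an error, which is exactly why this kind of bug tends to slip through unnoticed.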

Broken UIs

German, Finnish, or Russian words are often much longer than English ones, which means buttons or labels might overflow when translated. Languages like Chinese or Japanese are shorter and could leave odd gaps. Arabic and Hebrew read right to left, which can lead to UI misalignments.
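A rough way to spot likely overflows is to compare each translation against a character budget for the UI element. The sketch below is illustrative only: the `find_overflows` helper and the 10-character budget are made up, and character count is a crude stand-in for rendered width, so treat a hit as a prompt to look at the real layout.

```python
def find_overflows(translations, max_chars):
    """Return (locale, text) pairs whose text is longer than max_chars."""
    return [
        (locale, text)
        for locale, text in translations.items()
        if len(text) > max_chars
    ]

# One label in several languages, checked against a hypothetical budget.
settings_label = {
    "en": "Settings",
    "de": "Einstellungen",
    "fi": "Asetukset",
    "ru": "Настройки",
    "ja": "設定",
}

print(find_overflows(settings_label, max_chars=10))
# [('de', 'Einstellungen')]
```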

Sloppy localization, including text that overflows, cut-off buttons, or layouts that don’t align, doesn’t inspire trust and loyalty. In fact, it does the opposite and ramps up customer churn. Recent research shows that 24% of users turn away from brands with poor localization practices.

It’s not worth the risk of waiting it out and relying on user feedback. Localization testing before launch is a much smarter strategy.

24% of users turn away from brands with poor localization practices. A broken or misaligned UI, unnatural translations, or just bad formatting can ramp up customer churn, which is why testing ends up paying off.

👁️ What is localization testing?

Localization testing catches all those pesky localization errors before you put them out into the world.

It’s a bit like using quality assurance (QA) processes to find and correct software bugs, but instead of hunting down coding errors that crash your product, you’re looking for translation errors, cultural issues, and UI blips that might annoy or confuse people.

Localization testing is often referred to as global UX testing, as it involves checking whether the overall experience feels right for users in each market.

🔎 Automated checks to catch the basics

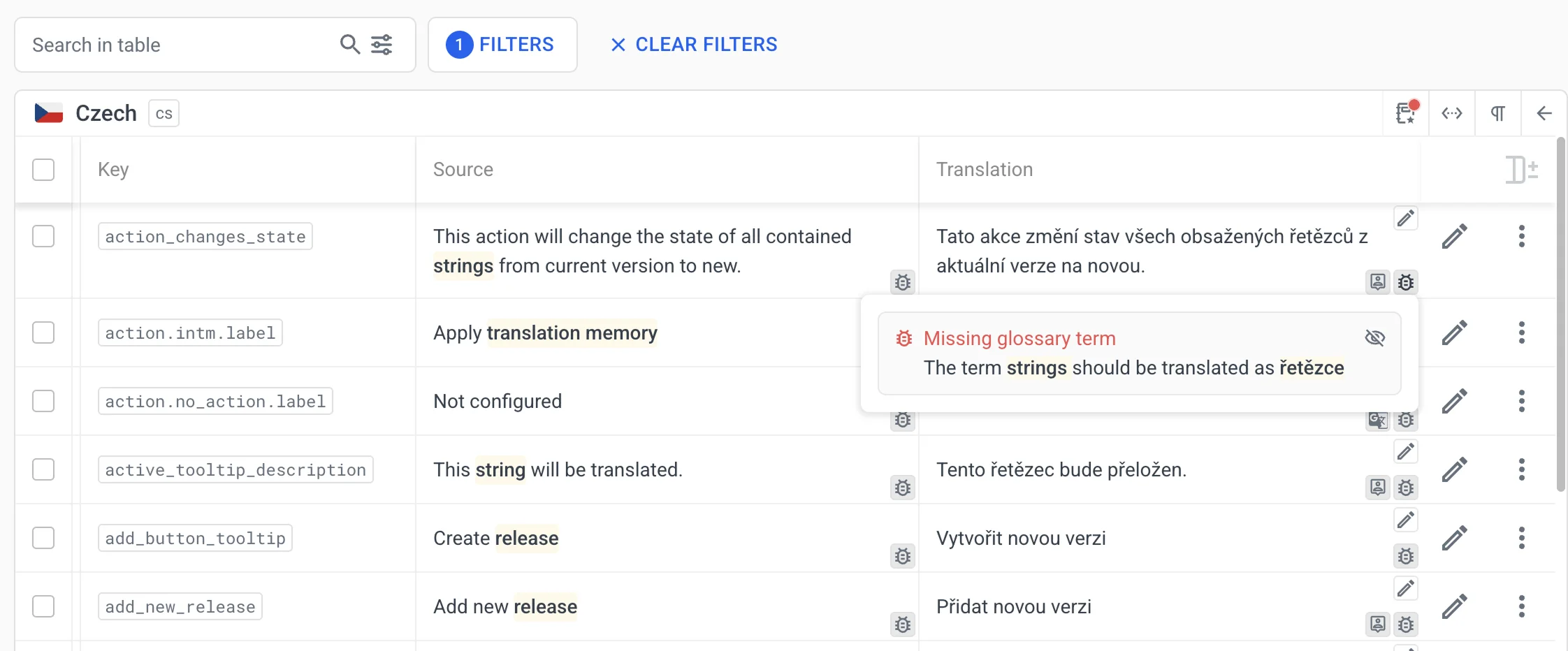

Automated checks are like a spell-check for your localized product. They apply localization QA rules to scan for common mistakes. Tools like Localazy have this feature built in, helping you catch glaring errors early on.

They flag things like common translation errors, placeholder mismatches, missing punctuation marks, awkward spacing, or formatting errors.

However, these checks are like an initial safety net and don’t catch everything. You need humans to go through everything with a fine-tooth comb if you want high-quality localization. 🪮
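To give a feel for what rules like these look like, here is a small Python sketch of three checks: placeholder mismatches, end punctuation, and spacing. It is not how Localazy implements its QA rules. The `qa_issues` function and the placeholder pattern (`{name}` style plus `%s` and `%d`) are assumptions for illustration.

```python
import re

# Assumed placeholder styles: {name} and printf-style %s / %d.
PLACEHOLDER = re.compile(r"\{[A-Za-z0-9_]+\}|%[sd]")

def qa_issues(source, translation):
    """Return a list of rule names that the translation trips."""
    issues = []
    # Rule 1: both strings must contain the same placeholders.
    if sorted(PLACEHOLDER.findall(source)) != sorted(PLACEHOLDER.findall(translation)):
        issues.append("placeholder mismatch")
    # Rule 2: if the source ends in punctuation, the translation should too.
    last = source.rstrip()[-1:]
    if last and last in ".!?:" and not translation.rstrip().endswith(last):
        issues.append("end punctuation differs")
    # Rule 3: stray leading/trailing whitespace or double spaces.
    if translation != translation.strip() or "  " in translation:
        issues.append("awkward spacing")
    return issues

print(qa_issues("Hello, {name}!", "Hallo, {nmae}!"))
# ['placeholder mismatch']
print(qa_issues("Saved.", "Gespeichert "))
# ['end punctuation differs', 'awkward spacing']
print(qa_issues("Delete %d files?", "%d Dateien löschen?"))
# []
```

The punctuation rule is only a heuristic, since some languages legitimately end sentences with different marks. That is one more reason a human still needs to look at what the rules flag.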

In-context & pseudo-testing for previews

Reading translations in a spreadsheet is a totally different experience from seeing them out there in the “wild” (that is, actually in your product).

Using an in-context preview tool lets you drop translations into the real layout, making it easy to spot broken buttons, odd spacing, gaps, or text that doesn’t quite fit. Integrations with popular tools like Figma make it even easier.

You can also use pseudo-localization, a clever trick that involves adding fake text or special characters to mimic real translations. This stretches out words, adds accents, and tests encoding so you can catch the ugly stuff early on, like cut-off labels or characters that refuse to render properly. For example, “Account Settings” could be written as something like [!!! Àççôûñţ Šéţţîñĝš !!!].
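Here is a minimal pseudo-localization sketch in Python that reproduces the “Account Settings” example. The accent map is deliberately tiny, and the `pseudo_localize` function and the `{placeholder}` syntax are assumptions for illustration. Real tools usually cover the whole alphabet and also pad strings to simulate longer languages.

```python
import re

# A deliberately small accent map; extend it to cover the full alphabet.
ACCENTS = str.maketrans({
    "A": "À", "S": "Š", "c": "ç", "e": "é", "g": "ĝ", "i": "î",
    "n": "ñ", "o": "ô", "s": "š", "t": "ţ", "u": "û",
})

# Assumed placeholder syntax: {name}. These must survive untouched.
PLACEHOLDER = re.compile(r"(\{[^{}]*\})")

def pseudo_localize(text):
    """Accent the letters, keep placeholders intact, and wrap in markers."""
    parts = PLACEHOLDER.split(text)
    # With a capturing group, split() puts the placeholders at odd indexes.
    out = [p if i % 2 else p.translate(ACCENTS) for i, p in enumerate(parts)]
    return "[!!! " + "".join(out) + " !!!]"

print(pseudo_localize("Account Settings"))  # [!!! Àççôûñţ Šéţţîñĝš !!!]
print(pseudo_localize("Hello, {name}"))     # [!!! Héllô, {name} !!!]
```

If a closing marker goes missing in the UI, the label is being cut off. If a string shows up without accents, it is probably hard-coded rather than pulled from your translation files.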

Human review for nuance

Automation can’t tell you when something simply sounds wrong. That’s where human reviewers come in. They make sure the tone feels natural and that any idioms make sense, a process known as linguistic testing. It focuses on grammar, tone, meaning, and flow so the product feels like it was built for the target market from day one. If there are cultural references, reviewers ensure they land the way they should and don’t cause confusion or offense.

Continuous Integration with development

A Continuous Integration (CI) approach offers the best results. It means localizing your product as it’s built and updated. You can use tools that take new strings straight from your code and feed them into the localization platform and back again.

A good CI workflow means translations get tested as part of your regular build, rather than being bolted on at the end. It leads to faster feedback and fewer last-minute surprises. It means you squash localization bugs early before they get a chance to annoy your audience.
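As a sketch of what “tested as part of your regular build” can mean in practice, the Python below compares each locale file against the source locale and reports missing or empty strings. The flat `locales/<code>.json` layout and both function names are assumptions. Adapt them to however your project stores translations.

```python
import json
from pathlib import Path

def missing_keys(source, target):
    """Keys present in the source locale but absent or empty in the target."""
    return sorted(k for k in source if not str(target.get(k, "")).strip())

def check_locales(locale_dir, source_locale="en"):
    """Map each target locale to its missing keys (flat JSON files assumed)."""
    locale_dir = Path(locale_dir)
    source_path = locale_dir / f"{source_locale}.json"
    source = json.loads(source_path.read_text(encoding="utf-8"))
    report = {}
    for path in sorted(locale_dir.glob("*.json")):
        if path.stem == source_locale:
            continue
        target = json.loads(path.read_text(encoding="utf-8"))
        gaps = missing_keys(source, target)
        if gaps:
            report[path.stem] = gaps
    return report

print(missing_keys({"save": "Save", "cancel": "Cancel"}, {"save": "Speichern"}))
# ['cancel']
```

In a CI job, you could call `check_locales("locales")` and fail the build whenever the report is not empty, so an untranslated string gets caught in the pull request rather than in production.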

⚙️ See some examples of CI technical workflows in localization here

Task automation to save time

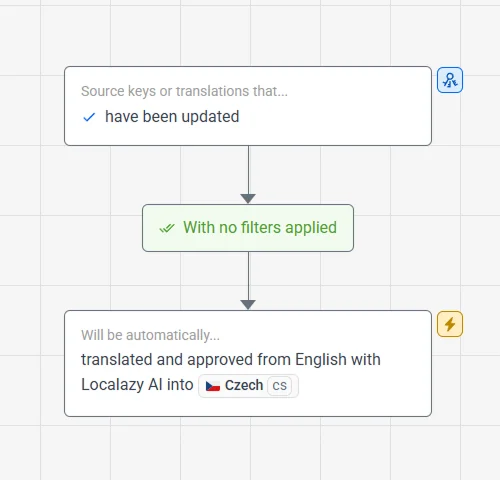

As well as automated checks at the beginning, you can also automate some of the dull, repetitive stuff, such as syncing files, spotting duplicates, or running machine or AI-powered pre-translations. Using smart automations like these frees your team from some of their busywork to focus on the things where they can bring real value, like optimizing content and UX.
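Spotting duplicates is a good example of busywork that is easy to script. This Python sketch groups keys whose source text is identical once whitespace and letter case are ignored. The `find_duplicates` function and the key names are made up for illustration.

```python
from collections import defaultdict

def find_duplicates(strings):
    """Group keys whose source text matches after normalizing space and case."""
    groups = defaultdict(list)
    for key, text in strings.items():
        normalized = " ".join(text.split()).casefold()
        groups[normalized].append(key)
    return [keys for keys in groups.values() if len(keys) > 1]

source_strings = {
    "home.save": "Save",
    "profile.save": "save ",
    "home.cancel": "Cancel",
}

print(find_duplicates(source_strings))
# [['home.save', 'profile.save']]
```

Treat the output as a list of candidates rather than something to merge automatically, because the same English word can need different translations depending on where it appears.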

🐞 How to catch issues before users spot them

Doing localization testing and management manually is a bit of a nightmare. It swallows up hours of a developer’s schedule, which is time that would be better spent coding and adding new features. The added pressure on development teams means that localization errors often slip through the net.

Continuous localization helps you avoid both the last-minute rush to fix errors and the wait for users to complain. It builds translation and QA into the development pipeline so that every new feature launch and update gets localized and tested as part of the release cycle.

Doing localization testing and management manually is a bit of a nightmare. With a continuous localization approach, errors get fixed, features are developed, and testing is carried out all in parallel.

Platforms like Localazy make continuous localization testing and fixing much easier to manage. For example, Wall Street English used Localazy’s Strapi integration to cut hours of manual work. “We translated and deployed our content to create three new sites at the click of a button,” says Speck.

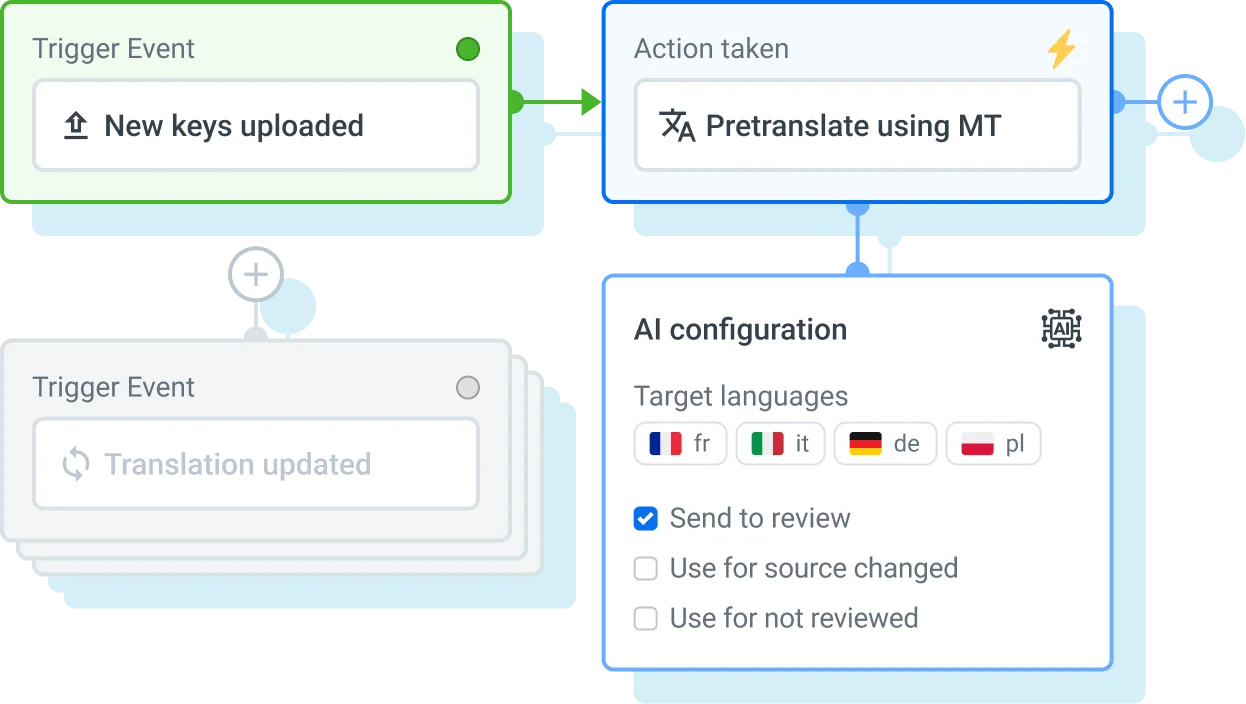

You can set up Localazy so that it automatically translates new strings as soon as they appear in your project. It pulls from trusted MT engines (like DeepL, Amazon, or Azure) or from the Localazy AI model, and cross-checks with its own shared translation memory to give you an instant first draft. 📑

Localazy also runs automated QA rules in the background to flag common UI and UX problems. These are quick wins that otherwise slip through unnoticed.

Post-editing with translators & reviewers

After producing the first automated draft, Localazy lets you layer human input on top of the machine output:

✍️ Remote translators

The machine does a first pass, then a human linguist checks context, punctuation, placeholders, and fixes anything that feels off.

📝 Remote reviewers

Human reviewers run QA on translations (whether machine or human-generated) to catch consistency issues or tone mismatches.

The best thing is, you don’t have to hire and manage reviewers manually, as it’s all built into Localazy’s Autopilot service. For markets where you really don’t want to compromise on quality (your core revenue regions), Localazy connects you directly with vetted professional translators. They’ll handle the cultural adaptation and style to make sure it aligns perfectly with your brand voice.

Everything is managed within the platform workflows, so you don’t waste time engaging and managing freelancers or agencies.

Alternatively, you can opt for a machine translation approach using Localazy AI and style guides. It’s ideal for lower-risk products or for markets where speed and scalability are more important than perfection.

💙 Give Localazy a try today and see how it can improve your localization testing workflows. It’s free for the first 14 days!

❓ FAQ

Do I really need localization testing if my product is small?

Yes. Even a lightweight app or website can lose users fast if the translation feels off or the UI breaks. Small multilingual products often rely on first impressions and you may only get one shot.

Isn’t machine translation good enough now?

MT has come a long way, but it still misses nuance, idioms, and cultural fit. Machines catch the easy stuff, then humans add the finishing touches to make it sound natural. The winning formula is using both.

Can’t I just rely on user feedback to catch issues?

Not safely. Most people won’t tell you when something feels wrong: they’ll just run away. Waiting for complaints means losing users you might never win back.

How much does localization testing cost?

It depends on your product size and number of languages. Doing it manually can add up — for example, five hours of testing across five languages at around $50 per hour, plus another eight hours of human review per language, could easily reach $2,800 or more.

But a platform like Localazy helps cut these costs. Automated QA, in-context previews, and built-in reviewer workflows remove much of the manual effort, cutting both time and spend. Instead of hiring and managing freelancers or agencies, you can handle everything in one place.
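The manual estimate in this answer can be read two ways, depending on whether the five testing hours are a total or a per-language figure. This quick Python sketch works through both readings using the numbers quoted above. The `manual_cost` function is just for illustration.

```python
RATE = 50         # USD per hour
LANGUAGES = 5
REVIEW_HOURS = 8  # human review hours per language

def manual_cost(testing_hours_total):
    """Testing hours plus per-language review hours, priced at RATE."""
    return (testing_hours_total + REVIEW_HOURS * LANGUAGES) * RATE

print(manual_cost(5))              # five testing hours in total: 2250
print(manual_cost(5 * LANGUAGES))  # five testing hours per language: 3250
```

The two readings give $2,250 and $3,250, so the $2,800 figure is best treated as a ballpark that sits between them. Either way, the review hours make up most of the bill.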

How long does localization testing take?

For small apps, think a few hours per language. For bigger localized products (like games or complex platforms), it can take days for each language. Either way, catching errors before launch is always faster than fixing them afterwards.

What’s the difference between localization testing and QA testing?

QA looks for things that break your product. Localization testing looks for things that break the experience: the language, culture, layout, and flow, which is the stuff that makes users feel “this was built for me.”